2-Step Verification Smart Safe

PROBLEM:

Many standard safes rely on a single stage of security, usually a simple pin-pad. Anyone who gains possession of the pin, including a thief, can open the safe.

SOLUTION:

In order to prevent easy entry, we’ve created a smart safe that not only requires a pin but also requires facial recognition as a second stage of security.

OVERVIEW:

The Smart Safe project is a multi-factor authentication system that combines a digital pin-pad with facial recognition. The safe requires two forms of verification before granting access to a user. The first is a unique pin assigned to a person, and the second is facial identification of the person that pin belongs to. The safe can also store authentication information for multiple people. If someone enters a pin that is not assigned to any user, the digital pin-pad displays a "NO SUCH USER" message. Furthermore, if an unauthorized person manages to enter a valid pin, they will not pass the second stage of verification, because the safe will be looking for the face that belongs to that pin.
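The two-stage check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not our actual code: the `USERS` table, the second pin in it, the function names, and the "FACE DOES NOT MATCH" message are all hypothetical, and the recognized name is assumed to come from the facial recognition stage.

```python
# Hypothetical pin table: each unique pin maps to exactly one enrolled user.
USERS = {"1234": "Andres", "5678": "Karnveer"}

NO_SUCH_USER = "NO SUCH USER"


def check_pin(pin, users=USERS):
    """Stage 1: return the user assigned to this pin, or None if unknown."""
    return users.get(pin)


def grant_access(pin, recognized_name, users=USERS):
    """Stage 2: the recognized face must belong to the owner of the pin."""
    expected = check_pin(pin, users)
    if expected is None:
        return False, NO_SUCH_USER
    if recognized_name != expected:
        # Hypothetical message; a valid pin alone is never enough.
        return False, "FACE DOES NOT MATCH"
    return True, expected
```

With this table, `grant_access("1234", "Karnveer")` is rejected even though the pin is valid, which is the behavior the second stage is meant to provide.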

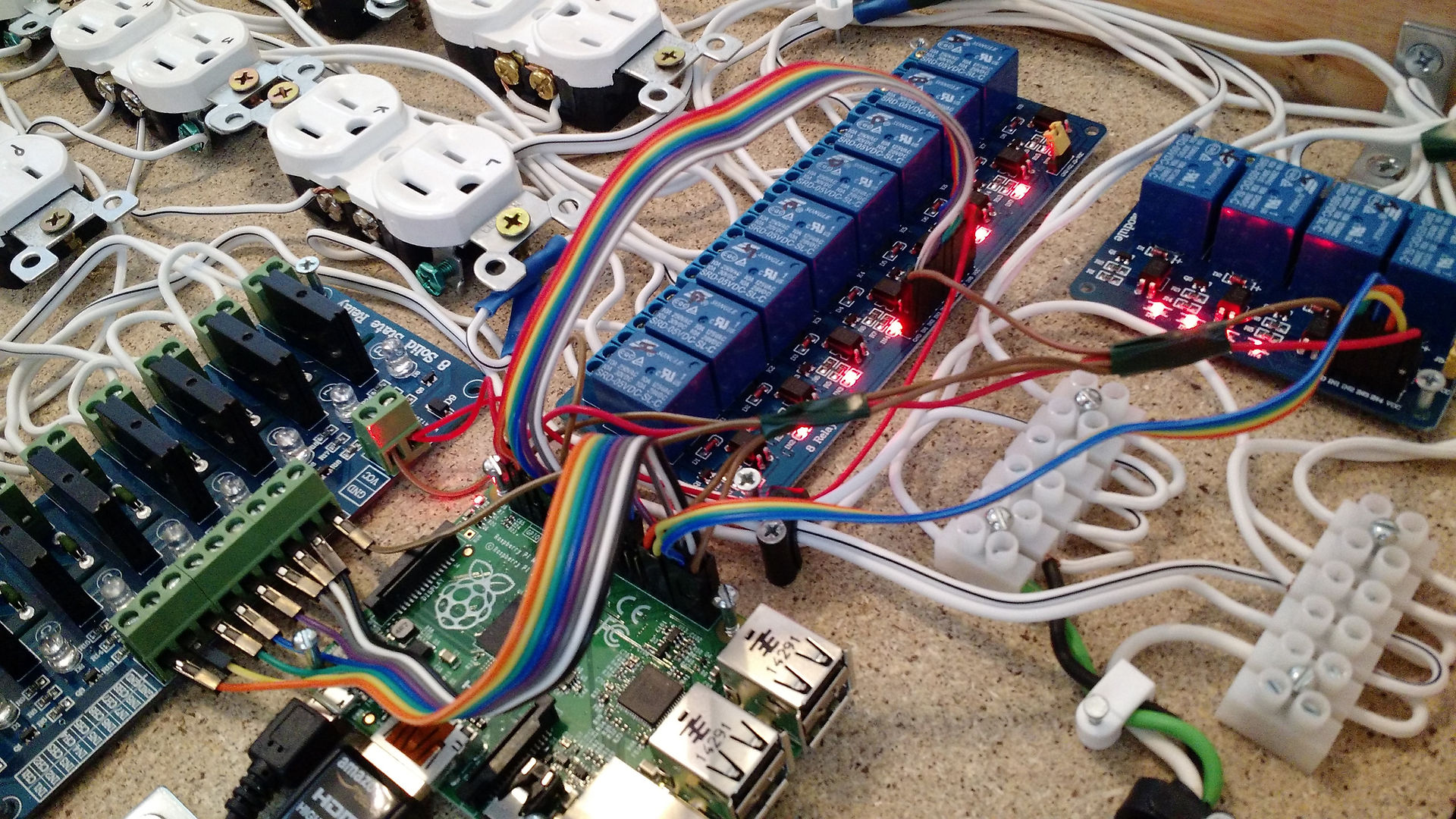

To complete this project we needed to decide which programming language to write our software in and which equipment would be required. We committed to the Raspberry Pi 3 as our main computer and Python 3 as our scripting language. All other components and materials are described and listed in the 'Components Used' section under the 'Design Considerations' tab.

This project is targeted to serve households, businesses or hospitals to protect valuable items, important documents, or irreplaceable objects.

DESIGN CONSIDERATIONS:

FLOW CHART


COMPONENTS USED

In order to achieve the goals laid out in our project description we plan to use the following components:

Raspberry Pi 3 (RPi 3)

We chose the Raspberry Pi as our controller because it easily satisfies multiple requirements. First, the RPi 3 has a built-in Wi-Fi module, allowing us to set up text and email services. Second, the RPi 3 has a built-in camera connector, allowing us to add a camera to our system without sacrificing any general-purpose input/output (GPIO) pins. Furthermore, since the RPi 3 also has a 400 MHz GPU built into it, we will be able to process our images and achieve facial recognition.

Raspberry Pi Camera

As mentioned above, this camera is specifically designed for the Raspberry Pi making compact video integration simple.

4" TFT LCD Touch Display

The LCD display will be used to interact with the pin-pad and to display camera input during the facial recognition process. We chose the 4" LCD Touch Display because it gives the safe a clean, modern look. It also allows us to use graphical user interface applications to display anything we'd like.

Electric Strike Lock

The electric strike lock will be our locking mechanism and will be powered by a 12 V supply. It is perfect for our project since it executes an in-and-out motion when unlocking and locking.

Relay Switch

The relay switch will be driven by one of the Raspberry Pi GPIO pins and will close the circuit between the 12 V power supply and the electric strike lock. This component is essential to our project because the RPi outputs at most 5 V (3.3 V on its GPIO pins), while the lock requires 12 V to turn on.
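As a rough sketch of how a GPIO pin can drive the relay, the class below wraps the `RPi.GPIO` library. The pin number, the class name, and the assumption that energizing the relay unlocks the strike are all hypothetical; when `RPi.GPIO` is not available (for example on a laptop) the class only tracks state so the logic can still be exercised.

```python
try:
    import RPi.GPIO as GPIO  # available on the Raspberry Pi itself
except (ImportError, RuntimeError):
    GPIO = None  # not on a Pi: only track state, do not drive a pin

RELAY_PIN = 17  # assumed BCM pin number; use whichever pin is wired to the relay


class StrikeLock:
    """Drives the relay that connects the 12 V supply to the electric strike."""

    def __init__(self, pin=RELAY_PIN, active_high=True):
        self.pin = pin
        self.active_high = active_high  # some relay boards are active-low
        self.unlocked = False
        if GPIO is not None:
            GPIO.setmode(GPIO.BCM)
            GPIO.setup(self.pin, GPIO.OUT)
        self._write(False)  # start with the relay released (locked)

    def _write(self, energized):
        level = energized if self.active_high else not energized
        if GPIO is not None:
            GPIO.output(self.pin, GPIO.HIGH if level else GPIO.LOW)
        self.unlocked = energized

    def unlock(self):
        self._write(True)  # assumption: energizing the relay opens the strike

    def lock(self):
        self._write(False)
```

If the relay board turns out to be active-low, passing `active_high=False` inverts the pin level without changing the rest of the code.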

Buck Converter

The buck converter will be used to step down the power source voltage from 12 V to 5 V in order to power the Raspberry Pi.

Voltage Step Down Converter

The step down converter will perform the same action as the buck converter by stepping down the power source voltage from 12 V to 5 V. It will be used to power the relay switch.

12V Power Supply

The 12 V, 5 A power supply will be our main source of power for all the components listed above. It must be plugged into a wall outlet.

Safe Box

This safe box will be used to keep the valuable items secured. The door of the box will hold all of the components in place and the power supply will enter from the back panel. This is also a perfect box for us because its layers are thin, which makes it easier to cut an opening for the LCD touch screen.

SCHEMATIC

GRAPHICAL USER INTERFACE (GUI):

GUI USING TKINTER

In order to make this safe more technologically advanced than ordinary safes, we wanted to add an LCD touch screen display as our main interface. I (Andres) took on the LCD work for our project, although I had no prior experience working with LCDs. I began by doing some research online and discovered Tkinter, a commonly used GUI toolkit for Python. Tkinter also turned out to be a great fit for our project because its libraries come pre-installed on the Raspberry Pi, which eliminated the hassle of downloading files and finding a decent tutorial to guide me through the installation.

Now that I knew about Tkinter, I had to learn how to use it. Luckily, I was able to find a chapter online covering the basics of Tkinter. It taught me everything I needed to know, such as creating a root window, adding different types of widgets, and placing them on the window with the grid geometry manager. I also did a few simple LED projects using Tkinter as practice before beginning work on our actual project.

HOW THE DESIGN CAME TO BE

1. Home Screen Window

This was the least challenging step compared with the other windows, since it did not require many widgets. I began by creating a 320 x 480 root window that serves as the main menu of the system. These dimensions are the size of the touch screen LCD, so the root window fills the display completely. I added a button that fills the whole window so that when someone touches anywhere on the screen, the button is pressed and the next window opens. I also added a simple label on the button stating "Welcome to Smart Safe! Touch Screen to Continue".

2. Pin-Pad Window

I wanted the second window to be the first step of verification, where the user enters his/her pin on a pin-pad. To do this, I created an x and y grid for the entire window and placed the desired widgets at specific coordinates to form a pin-pad within the display. For instance, I placed the 1 button at (x = 0, y = 4), the 2 button at (x = 1, y = 4), and the 4 button at (x = 0, y = 3). I then created a function called "face_verification" that is executed when a unique four-digit pin is entered on the pin-pad. My pin was "1234", so whenever I entered "1234", it would execute the face_verification function. That function then opens the following window, which is the second step of verification. This step was a bit more challenging and a lot more time consuming than the first, since I needed to display 16 buttons and have each one trigger its own action.
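The grid placement and the four-digit trigger can be sketched as follows. This is a simplified illustration rather than our actual code: `digit_position`, `PinEntry`, and `build_pin_pad` are hypothetical names, only the nine digit buttons whose layout follows from the coordinates above are shown, and the remaining buttons of the 16 are omitted.

```python
PIN_LENGTH = 4


def digit_position(digit):
    """Return the (x, y) grid cell for digits 1-9, three keys per row.

    Matches the coordinates in the text: 1 -> (0, 4), 2 -> (1, 4), 4 -> (0, 3).
    """
    index = digit - 1
    return index % 3, 4 - index // 3


class PinEntry:
    """Collects key presses and fires a callback when a known pin is completed."""

    def __init__(self, valid_pins, on_valid, on_invalid=None):
        self.valid_pins = set(valid_pins)
        self.on_valid = on_valid
        self.on_invalid = on_invalid
        self.digits = ""

    def press(self, digit):
        self.digits += str(digit)
        if len(self.digits) < PIN_LENGTH:
            return
        pin, self.digits = self.digits, ""  # reset for the next attempt
        if pin in self.valid_pins:
            self.on_valid(pin)
        elif self.on_invalid is not None:
            self.on_invalid(pin)


def build_pin_pad(root, entry):
    """Place the nine digit buttons on the window with the grid geometry manager."""
    import tkinter as tk  # imported here so the logic above runs without a display

    for digit in range(1, 10):
        x, y = digit_position(digit)
        tk.Button(
            root, text=str(digit), command=lambda d=digit: entry.press(d)
        ).grid(column=x, row=y, sticky="nsew")
```

In this sketch, something like `PinEntry({"1234"}, face_verification)` would connect the pad to the second stage, assuming `face_verification` accepts the entered pin as its argument.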

3. Facial Recognition Window

I knew this would be the toughest software challenge of the project, since I now needed to integrate Tkinter with facial recognition. I had no real idea how to go about this. I didn't even know how to make the camera feed show up on the window, or whether Tkinter supported the camera at all. So I did some additional research and discovered the frame widget, which can be used to output images or video coming from a camera. We first tried to display a single image taken from the camera on the frame widget. Afterwards we attempted to show a video stream in the frame to see if Tkinter allowed it, and luckily it did. Now that the camera feed was being displayed on the window, we had to link that feed with the facial recognition features. That process is explained by Karnveer in the following tab, "Facial Recognition". To generate the final access window, the system has to 1) recognize the user whose pin was entered in the first stage of verification and 2) keep that user detected for more than 5 seconds. If these requirements are satisfied, it executes the "access_granted" function, which creates the final window of the system.
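The "stay detected for more than 5 seconds" rule can be sketched as a small timer that is fed the names recognized in each frame. The class name, its parameters, and the choice to restart the count whenever the expected face disappears are assumptions made for illustration.

```python
import time

REQUIRED_SECONDS = 5.0


class DwellTimer:
    """Tracks how long the expected user has been recognized without a gap."""

    def __init__(self, expected_name, required=REQUIRED_SECONDS, clock=time.monotonic):
        self.expected_name = expected_name
        self.required = required
        self.clock = clock  # injectable so the timer can be tested without waiting
        self.started_at = None

    def update(self, recognized_names):
        """Feed the names found in the latest frame; True once access is earned."""
        now = self.clock()
        if self.expected_name not in recognized_names:
            self.started_at = None  # face lost or wrong person: start over
            return False
        if self.started_at is None:
            self.started_at = now
        return now - self.started_at > self.required
```

Each processed frame would call `update(...)`, and the first `True` result would be the point at which a function such as access_granted is invoked.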

4. Access Granted Window

For this window I only needed to create three button widgets: UNLOCK, LOCK, and EXIT. It wouldn't make sense to show the UNLOCK button when the electric strike is already open, so I wanted the LOCK and UNLOCK buttons to replace each other depending on the state of the electric strike. For example, if the electric strike is closed and I press the UNLOCK button, the electric strike opens and the UNLOCK button is replaced by the LOCK button, and vice versa. Each widget has a command that calls its designated function. When the EXIT button is pressed, it destroys all the windows and takes you to a loading screen, where you wait a few seconds for the system to start up again. We also added a safety feature to the EXIT button's function: if you accidentally forget to lock your safe, the EXIT button locks it for you by closing the electric strike.
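The button-swapping behavior and the EXIT safety feature boil down to a small amount of state, sketched below. `AccessPanel` and its method names are hypothetical, and `lock` stands for any object that provides `lock()` and `unlock()` methods.

```python
class AccessPanel:
    """Button logic for the final window; `lock` needs lock() and unlock()."""

    def __init__(self, lock, on_exit=None):
        self.lock = lock
        self.on_exit = on_exit
        self.unlocked = False  # the strike starts closed

    @property
    def toggle_label(self):
        # Only the action that makes sense in the current state is offered.
        return "LOCK" if self.unlocked else "UNLOCK"

    def press_toggle(self):
        if self.unlocked:
            self.lock.lock()
        else:
            self.lock.unlock()
        self.unlocked = not self.unlocked
        return self.toggle_label

    def press_exit(self):
        if self.unlocked:  # safety net: never leave the safe open on exit
            self.lock.lock()
            self.unlocked = False
        if self.on_exit is not None:
            self.on_exit()
```

In a Tkinter window, the toggle button's command could update its own text with the value returned by `press_toggle()`, for example through the button's `config(text=...)` method.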

FACIAL RECOGNITION

RESEARCHING FACIAL RECOGNITION

In order to have a more robust form of security than the standard keypad pin, we decided to add facial recognition. This decision was also strongly motivated by our desire to learn a new skill over the course of this project. Since I (Karnveer) had no experience with facial recognition or computer vision software, I took to Google to research. I came across many tutorials and examples that helped guide my learning process, but pyimagesearch.com was a particularly invaluable resource. A gentleman by the name of Adrian Rosebrock maintains the site and does a wonderful job of explaining difficult concepts with depth and accuracy. I highly recommend checking out his content for help with anything image processing related.

In my research I was able to break the problem down into two important steps. The first task was to detect a face in a given image. Second, we would compare that face to a local database of faces to check if it was a known or unknown face.

THE PROCESS

1. Installing the Necessary Software

In order to perform facial recognition we needed a handful of very important libraries. Some of these libraries included imutils, PIL, pickle, and numpy. First we have imutils, an image processing library that makes basic image manipulations with OpenCV much easier. Then we have PIL, which also helps format images; we use it to format the image to be displayed on our GUI. Next we have pickle, which is used to serialize and de-serialize Python objects; it is what we used to read our file of known facial vectors. Numpy was used in conjunction with OpenCV to perform many of the math operations necessary for OpenCV to run. Lastly, we had OpenCV and Python's face_recognition library, which did most of the work of facial detection and recognition. I will talk more about these two later.
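As a small illustration of the pickle step, the sketch below writes and reads a file of known facial vectors. The dictionary layout with "encodings" and "names" keys and the function names are assumptions for this example, not necessarily the format we used.

```python
import pickle


def save_known_faces(path, encodings, names):
    """Serialize the enrolled face vectors and their names to one file."""
    if len(encodings) != len(names):
        raise ValueError("each encoding needs exactly one name")
    with open(path, "wb") as f:
        pickle.dump({"encodings": list(encodings), "names": list(names)}, f)


def load_known_faces(path):
    """De-serialize the file and return (encodings, names)."""
    with open(path, "rb") as f:
        data = pickle.load(f)  # only load pickle files you created yourself
    return data["encodings"], data["names"]
```

Loading happens once at start-up, so the known vectors are already in memory when frames start arriving.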

2. Getting Images

Before we could do any processing, we first needed a camera to capture images. This was easily achieved by using the Raspberry Pi 3 along with a Raspberry Pi Camera. In our software we grab an image from the camera and then begin looking for faces in it.

3. Finding and Recognizing Faces

Now that we had all our necessary software installed and images coming in, we were ready to detect faces. To do this we used OpenCV to deploy a Haar feature-based cascade classifier trained to detect frontal faces. The cascade classifier sweeps across the image and detects whether any faces are in the frame, which gives us a list of the faces found. Each face is then isolated and transformed into a 128-dimensional vector, which is what we use to compare the detected face to our local database of known faces. Before moving forward, it is important to mention that prior to processing new images we had taken images of our own faces to serve as our "known faces" and had also encoded those into 128-dimensional vectors. These known vectors are the local database I am referring to. Using the face_recognition library, we compare our newly generated vector to our known facial vectors and look for a match. If the match is 'True', we label the image with the name belonging to the face before passing it to the output.
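The detect, encode, and compare steps can be sketched as below. This is an illustration under assumptions rather than our exact code: `match_face` and `encode_faces` are hypothetical helpers, `detector` is assumed to be a `cv2.CascadeClassifier` already loaded with a frontal-face Haar cascade, and the detection parameters are example values. The comparison mirrors the idea behind `face_recognition.compare_faces`, which treats two vectors as a match when their Euclidean distance is within a tolerance (0.6 by default); unlike that function, this sketch returns only the closest name.

```python
import math

TOLERANCE = 0.6  # default threshold used by face_recognition.compare_faces


def match_face(known_encodings, known_names, candidate, tolerance=TOLERANCE):
    """Return the name of the closest known face within tolerance, else None."""
    best_name, best_distance = None, tolerance
    for encoding, name in zip(known_encodings, known_names):
        distance = math.dist(encoding, candidate)  # Euclidean; Python 3.8+
        if distance <= best_distance:
            best_name, best_distance = name, distance
    return best_name


def encode_faces(frame_bgr, detector):
    """Detect frontal faces with a Haar cascade and encode each as 128 numbers."""
    import cv2  # heavy dependencies are imported only when this is called
    import face_recognition

    gray = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2GRAY)
    rgb = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)
    rects = detector.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    # OpenCV returns (x, y, w, h); face_recognition expects (top, right, bottom, left).
    boxes = [(int(y), int(x + w), int(y + h), int(x)) for (x, y, w, h) in rects]
    return face_recognition.face_encodings(rgb, boxes)
```

A frame would then be handled by calling `encode_faces` once and passing each resulting vector to `match_face`; a result of `None` means the face is unknown.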


RESULTS:

With a lot of time, effort and debugging we were able to produce a fully functional facial recognition safe. Some of the major challenges we hit included installing software (specifically OpenCV), merging software together, powering everything appropriately, and just being careful.

Installing OpenCV can be a very time-consuming and involved process, and in our case we had problems when using the quick pip-install method. In order to have a successful installation we had to build and install via CMake, which can be a 4-hour process as opposed to about 20 minutes for the pip-install method. However, this gave us a more complete installation along with the ability to change parameters such as how many processor cores to use.

After we (Andres and Karnveer) had finished our code for the GUI and facial recognition, it was time to merge the two programs together. This has always been a challenge for me in the past, so we allocated a few days to deal with any issues that were bound to arise. For example, we did not know that we needed a library called PIL in order to format images to be displayed via a Tkinter GUI. Furthermore, because of the GUI we had to embed the facial recognition software in a function that could be appropriately called by Tkinter.
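One common way to combine the two programs is to let Tkinter call a frame-processing function repeatedly through its `after` scheduler, converting each OpenCV frame with PIL before showing it. The sketch below assumes the image is shown on a Tkinter `Label`; `frame_to_photo`, `start_video_loop`, and their parameters are hypothetical names, and this is not necessarily how our final code was structured.

```python
def frame_to_photo(frame_bgr):
    """Convert an OpenCV BGR frame into an image object Tkinter can display."""
    import cv2  # imported lazily so the loop logic can run without a camera
    from PIL import Image, ImageTk

    rgb = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)
    return ImageTk.PhotoImage(Image.fromarray(rgb))


def start_video_loop(root, label, read_frame, process=None,
                     interval_ms=30, to_photo=frame_to_photo):
    """Show camera frames on `label`, rescheduling itself through root.after."""

    def tick():
        frame = read_frame()
        if frame is not None:
            if process is not None:
                frame = process(frame)  # e.g. run recognition and draw labels
            photo = to_photo(frame)
            label.configure(image=photo)
            label.image = photo  # keep a reference so it is not garbage-collected
        root.after(interval_ms, tick)  # hand control back to Tkinter

    tick()
```

Because each `tick` returns quickly and reschedules itself, the buttons on the window stay responsive while the video is running.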

Once we had all our software together we figured we should be getting close to the finish line, but we had forgotten to consider the issues associated with powering our hardware appropriately. We attempted to power our Raspberry Pi by connecting it directly to the 5 V supply line, which worked, but we were suddenly unable to power the LCD screen properly. This was because when the RPi is powered via USB, the power passes through protection circuitry that allows the Raspberry Pi to output more current, which we needed. We also needed to power our relay separately, since it uses an electro-mechanical switch that pulled more power than the Raspberry Pi was able to continuously output.

Since we were not careful while mounting and moving our hardware around, we ended up cracking our SD card, which had all of our software set up. This was a major blow to our progress, but we were able to bounce back quickly since we had backed up various versions of our code as well as the files needed to run things properly. Because we were well prepared, we were back up and running in a day and a half, with most of that time spent reinstalling OpenCV.

If we were to upgrade our project, we would want to add a feature that texts the owner of the safe when an unknown user shows up, and we would also improve the quality of our video stream.